In this article I’d like to discuss how logging can be implemented in a generic way, without polluting the domain logic with all kinds of logging mechanisms. This is part of a series of articles about how a microservice architecture can be applied in a domain-centric way without constantly dealing with technical aspects.

If you haven’t done it yet, I recommend checking my other articles in this series.

First of all, let’s clarify what logs and logging are in software development.

Logs are records that hold one or more state attributes of the system at a given time, structured in the form of events. Logging is the act of writing these records, typically to a log file or to a log analytics store. Do not confuse logs with metrics, which are point-in-time measurements of individual system parameters.

Logs are essential for inspecting the behavior of the system at a given time without needing access to the system itself. This is often the case in production environments, where reproducing the issue and debugging are not an option.

While writing logs is relatively simple, whether you use a local log file or a log analytics store such as Application Insights or Grafana Loki, integrating them into the application infrastructure so that they carry informative and relevant information can be a real challenge.

As for the integration, I think it is better to keep logging separate from the business domain and implement it only in a few generic components that wrap the different types of processes. In my article about distributed processes, I already discussed that abstracting these processes as domain events (process input) and their handlers (processor) allows you to create generic wrappers over every process, using an event subscriber, a command dispatcher or an RPC handler.

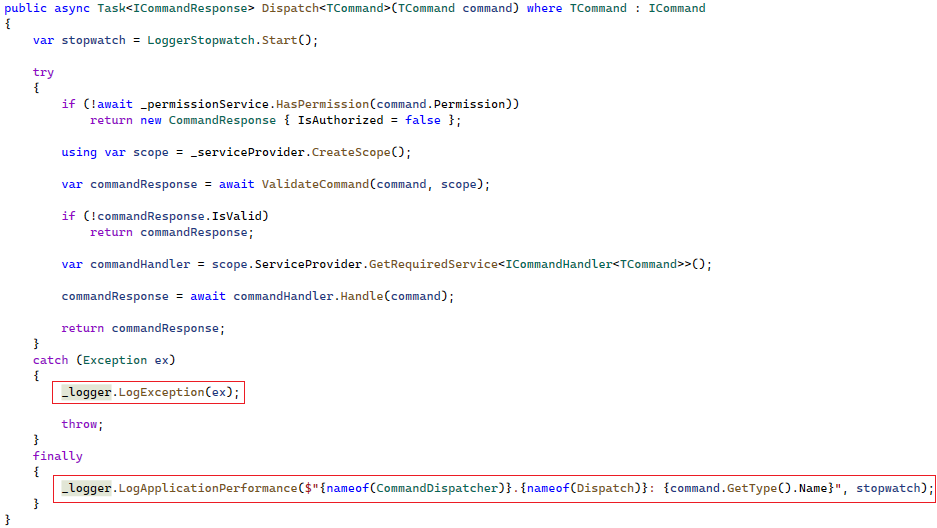

The code below is an example of a command dispatcher that wraps all the processes triggered by external events (commands), together with the generic logging that tracks contextual information and performance counters. The exception logs also contain the stack trace, pointing directly to the cause of the exception.

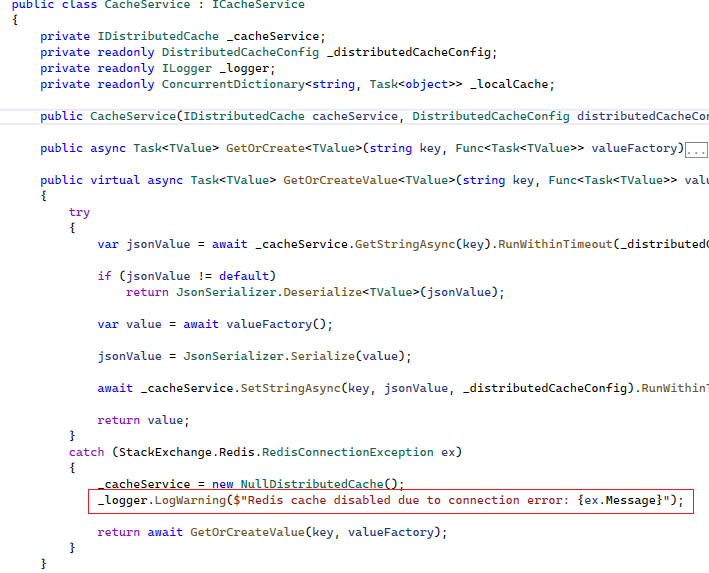
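The same idea can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation shown above: `CommandDispatcher`, `CreateOrder` and the logged field names (`command`, `elapsed_ms`) are hypothetical, and it relies only on the standard `logging` module.

```python
import logging
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Type

logger = logging.getLogger("dispatcher")


@dataclass
class CreateOrder:
    """Hypothetical command used only for this example."""
    order_id: str


class CommandDispatcher:
    """Routes commands to handlers and logs every execution in one place."""

    def __init__(self) -> None:
        self._handlers: Dict[Type[Any], Callable[[Any], Any]] = {}

    def register(self, command_type: Type[Any], handler: Callable[[Any], Any]) -> None:
        self._handlers[command_type] = handler

    def dispatch(self, command: Any) -> Any:
        name = type(command).__name__
        handler = self._handlers[type(command)]
        start = time.perf_counter()
        try:
            result = handler(command)
        except Exception:
            # logger.exception logs at ERROR level and attaches the stack trace.
            logger.exception(
                "command failed",
                extra={"command": name, "elapsed_ms": _elapsed_ms(start)},
            )
            raise
        logger.info(
            "command handled",
            extra={"command": name, "elapsed_ms": _elapsed_ms(start)},
        )
        return result


def _elapsed_ms(start: float) -> float:
    """Milliseconds elapsed since `start` (a time.perf_counter() value)."""
    return (time.perf_counter() - start) * 1000


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    dispatcher = CommandDispatcher()
    dispatcher.register(CreateOrder, lambda cmd: f"order {cmd.order_id} created")
    print(dispatcher.dispatch(CreateOrder(order_id="42")))
```

The handlers themselves contain no logging calls. Every command passes through `dispatch`, so the duration, the command name and any exception are recorded in a single place.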

For logs that don’t track the execution of a process but a specific subtask, like calling an external service or monitoring the performance of the caching layer, it’s convenient to log in the generic infrastructure components, like a cache client or an RPC invoker.
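A generic cache client can follow the same approach. The sketch below is only an illustration under stated assumptions: `LoggingCacheClient`, its `get_or_load` method and the field names are hypothetical, and an in-memory dictionary stands in for a real cache.

```python
import logging
import time
from typing import Any, Callable, Dict

cache_logger = logging.getLogger("cache")


class LoggingCacheClient:
    """Hypothetical cache client that logs hits, misses and lookup time."""

    def __init__(self) -> None:
        # In-memory stand-in for a real cache backend.
        self._store: Dict[str, Any] = {}

    def get_or_load(self, key: str, load: Callable[[], Any]) -> Any:
        start = time.perf_counter()
        hit = key in self._store
        if not hit:
            # Cache miss: call the loader and remember its result.
            self._store[key] = load()
        elapsed_ms = (time.perf_counter() - start) * 1000
        cache_logger.info(
            "cache lookup",
            extra={"cache_key": key, "cache_hit": hit, "elapsed_ms": elapsed_ms},
        )
        return self._store[key]
```

Callers only ask for a value. Whether it came from the cache and how long the lookup took is recorded by the client, not by the domain code.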

Monitoring the system’s behavior is often more than looking at a single log record; it requires inspecting a series of logs related to the same process or to a distributed flow in order to build a picture of what happened. This is why correlating logs is extremely important, together with the ability to filter out the irrelevant ones from the thousands of logs that a system can produce every minute.

Logging in generic infrastructure components still allows tracking process-specific information from the domain event or the event handlers. It also lays the ground for giving the logs a common structure and enriching them with contextual information such as correlation id, tenant or service name. These custom properties can be used to better understand the system’s behavior, or as the basis for a visual representation such as a dashboard built with Azure Log Analytics Workspace and Kusto queries, or with Grafana backed by Loki.

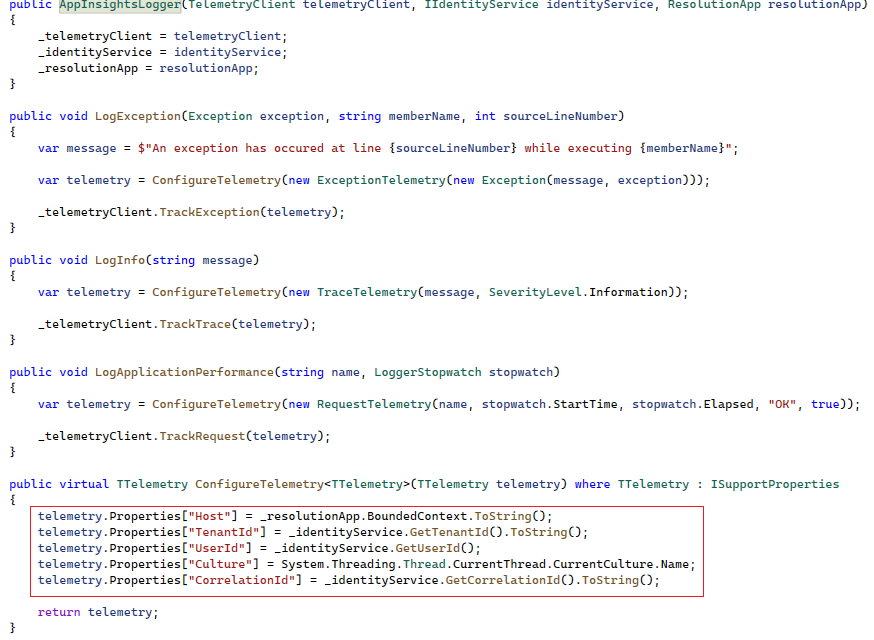

An example of a logger service enhancing logs with contextual information:
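A minimal sketch of the idea in Python, using the standard `logging` and `contextvars` modules. The names `ContextFilter`, `build_logger`, `SERVICE_NAME` and the value `order-service` are hypothetical and only illustrate the pattern.

```python
import contextvars
import logging

# Context set once per process run, for example by the dispatcher or subscriber.
correlation_id = contextvars.ContextVar("correlation_id", default="-")
tenant = contextvars.ContextVar("tenant", default="-")
SERVICE_NAME = "order-service"  # hypothetical service name


class ContextFilter(logging.Filter):
    """Copies the current context onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        record.tenant = tenant.get()
        record.service = SERVICE_NAME
        return True  # never drop the record, only enrich it


def build_logger(name: str) -> logging.Logger:
    """Creates a logger whose records all share the same structure."""
    log = logging.getLogger(name)
    # The filter is attached to the logger, so every handler sees the extra fields.
    log.addFilter(ContextFilter())
    handler = logging.StreamHandler()
    handler.setFormatter(logging.Formatter(
        "%(asctime)s %(levelname)s service=%(service)s tenant=%(tenant)s "
        "correlation_id=%(correlation_id)s %(message)s"
    ))
    log.addHandler(handler)
    log.setLevel(logging.INFO)
    return log


if __name__ == "__main__":
    log = build_logger("orders")
    correlation_id.set("abc-123")
    tenant.set("contoso")
    log.info("order created")
```

Because the correlation id and tenant travel with the execution context, the code that writes a log entry does not need to pass them along explicitly.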

Sometimes it’s also necessary to add logs that track information from the business domain, like the results of a calculation or the outcome of logical conditions. However, these logs degrade the signal-to-noise ratio of your code, so I recommend adding them only where, and for as long as, they are needed. A practice I have found very efficient when investigating live issues that require additional logs is to add them directly to the release branch deployed to the environment producing the issue, without merging them back to the main branch. This way, newer releases will no longer contain the logs added temporarily for that particular problem.

I hope the examples above highlight some basic concepts that simplify logging: implement it in the generic infrastructure components provided by the process abstraction, add only the valuable information, following the principle of essentialism, and keep it separate from the business domain. Changing the logs at system level then requires only minor effort, as they are added in just a few places.