In this article I’d like to discuss how caching can be simplified through abstraction, so that it extends the existing behavior without modifying it. This is part of a series of articles about how microservice architecture can be applied in a domain-centric way without constantly dealing with technical aspects.

If you haven’t done so yet, I recommend checking out my other articles in this series.

First of all, we should clarify a bit what caching is, to be able to explore its full potential.

> Caching is a high-speed data storage layer which stores data, typically transient in nature, so that future requests for that data are served up faster than is possible by accessing the data’s primary storage location.

This is a definition that everybody knows, and I agree with what it says, but I find it misleading. It states that a cache is a transient, high-speed storage which must be faster than the primary storage of the data, which is true. In my experience, however, it also suggests that you should use it to store data somewhere other than the primary storage (e.g. SQL), as long as that place is faster. This makes people think that caching should be used to improve the storage layer, by caching the content of certain tables or the results of queries.

I have seen a couple of implementations which were tied to the data access layer, even to the ORM itself. However, I think that in order to explore the full potential of caching, the technical solution should be more versatile and meet these expectations:

- The caching service should allow storage and retrieval of the data faster than the primary storage does.

- The caching layer should be capable of storing the result of complex calculations, together with database query results, depending on where it is applied.

- The presence of caching should tune the performance of the system, but should not alter its behavior. Therefore enabling/disabling the caching layer should not result in any change in the system’s functionality.

- Neither the primary storage nor the consumer of the data or calculation should know about the existence of a caching layer to avoid coupling them.

The above things are cool, but you may think they’re really hard to achieve, or that they require continuous investment and repetitive work whenever a new set of data has to be cached.

I think that is not true, at least not in the way I have implemented caching in several projects over the past years, using the decorator design pattern.

The design pattern describes how to extend the behavior of a component by wrapping it in another component, which in our case will be the caching layer. Let’s see what this looks like when implemented in a system that already uses Inversion of Control and Dependency Injection.

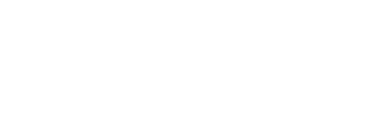

First of all, let’s have a repository that returns the data from the primary storage.

The above service is an example of a repository abstracting the data layer and exposing data through the ITenantRepository interface.
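As a rough illustration of such a repository, here is a minimal TypeScript sketch (not the .NET code this article is based on). Only the names `ITenantRepository` and `TenantRepository` come from the text; the `Tenant` shape, the `getTenants` and `addTenant` methods and the in-memory array standing in for the primary storage are assumptions, and the calls are synchronous for brevity.

```typescript
// Hypothetical tenant shape; the article does not show the real model.
interface Tenant {
  id: number;
  name: string;
}

// The abstraction that consumers depend on (name taken from the article,
// method names are assumptions).
interface ITenantRepository {
  getTenants(): Tenant[];
  addTenant(tenant: Tenant): void;
}

// Stand-in for the primary storage. A real implementation would query a
// database; an array keeps the sketch self-contained.
class TenantRepository implements ITenantRepository {
  private readonly rows: Tenant[] = [{ id: 1, name: "Contoso" }];

  getTenants(): Tenant[] {
    // Return a copy so callers cannot mutate the stored rows.
    return [...this.rows];
  }

  addTenant(tenant: Tenant): void {
    this.rows.push(tenant);
  }
}
```

Nothing in this class knows about caching, which is the point: it only talks to the primary storage.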

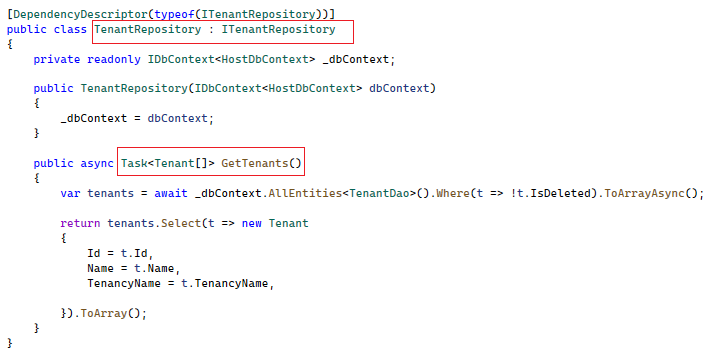

Next comes the consumer of the repository, which has no direct reference to the implementation of the repository, only to an interface acting as a façade.
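A consumer of this kind could look roughly like the TypeScript sketch below. `TenantDirectory` and `findByName` are hypothetical names used only for illustration, as are the `Tenant` shape and the `getTenants` method; the only thing taken from the article is that the consumer depends on `ITenantRepository` and nothing else.

```typescript
// Hypothetical tenant shape and repository method, for illustration only.
interface Tenant {
  id: number;
  name: string;
}

interface ITenantRepository {
  getTenants(): Tenant[];
}

// Hypothetical domain service. It receives the interface through its
// constructor and never names a concrete repository class.
class TenantDirectory {
  private readonly tenants: ITenantRepository;

  constructor(tenants: ITenantRepository) {
    this.tenants = tenants;
  }

  findByName(name: string): Tenant | undefined {
    return this.tenants.getTenants().find(t => t.name === name);
  }
}
```

Because the service sees only the interface, it cannot tell whether it was handed the real repository, a test stub, or a caching wrapper around either.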

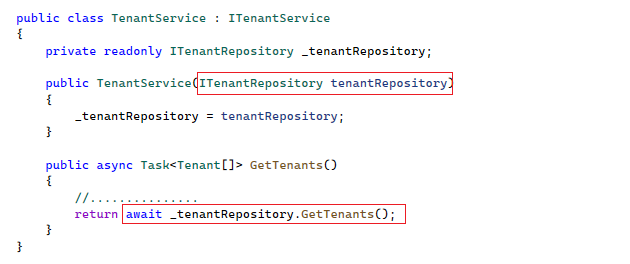

Now let’s see how the decorator design pattern allows wrapping the tenant repository into a new component without changing the tenant repository or any of its callers. This is my favorite way to meet the open-closed principle from SOLID.

As you can see, TenantRepositoryCache implements the same ITenantRepository interface as the repository itself, but it also gets a dependency of the same type injected.

The trick is that whoever asks for ITenantRepository as a dependency gets an instance of TenantRepositoryCache, except TenantRepositoryCache itself, which gets an instance of TenantRepository. In my implementations this is done by a custom tool built on top of IServiceCollection which understands the DecoratorDescriptor attribute, but you can achieve this in multiple ways.
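One of those other ways can be sketched in TypeScript with a tiny hand-written container. This is not the custom tool mentioned above and not the `IServiceCollection` API: the `Container` class with its `register`, `decorate` and `resolve` methods is entirely hypothetical and exists only to show the resolution rule (everyone gets the decorator, the decorator gets the real repository).

```typescript
interface ITenantRepository {
  getTenants(): string[];
}

class TenantRepository implements ITenantRepository {
  getTenants(): string[] {
    return ["Contoso"];
  }
}

class TenantRepositoryCache implements ITenantRepository {
  readonly inner: ITenantRepository;

  constructor(inner: ITenantRepository) {
    this.inner = inner;
  }

  getTenants(): string[] {
    // Caching logic is omitted here; this sketch is only about wiring.
    return this.inner.getTenants();
  }
}

// Hypothetical mini-container: one factory per token.
class Container {
  private readonly factories = new Map<string, () => unknown>();

  register<T>(token: string, factory: () => T): void {
    this.factories.set(token, factory);
  }

  // Replaces a registration with one that wraps the previous registration.
  decorate<T>(token: string, wrap: (inner: T) => T): void {
    const previous = this.factories.get(token);
    if (!previous) throw new Error(`Nothing registered for ${token}`);
    this.factories.set(token, () => wrap(previous() as T));
  }

  resolve<T>(token: string): T {
    const factory = this.factories.get(token);
    if (!factory) throw new Error(`Nothing registered for ${token}`);
    return factory() as T;
  }
}

const container = new Container();
container.register<ITenantRepository>("ITenantRepository", () => new TenantRepository());
container.decorate<ITenantRepository>("ITenantRepository", inner => new TenantRepositoryCache(inner));
```

After these two registrations, resolving `"ITenantRepository"` yields a `TenantRepositoryCache` whose `inner` dependency is the plain `TenantRepository`. Removing the `decorate` line switches caching off without touching any consumer.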

Taking a closer look at TenantRepositoryCache, we can see that the list of tenants is returned from the cache using the key “Tenants”. If the cache doesn’t contain a value for the given key, it calls the repository and saves the result in the cache before returning the tenants. Invalidating the cache is simply done by removing the cached value using the same key as for the retrieval.
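The behavior described here can be sketched in TypeScript as follows. The names `TenantRepositoryCache`, `GetOrAdd`, `RemoveValue` and the key "Tenants" come from the article; the `ICacheService` interface name, the in-memory `InMemoryCacheService`, the `addTenant` write method and the decision to invalidate on that write are assumptions made for the sake of a runnable example.

```typescript
interface ITenantRepository {
  getTenants(): string[];
  addTenant(name: string): void;
}

// Cache abstraction with the two operations the article names.
interface ICacheService {
  getOrAdd<T>(key: string, factory: () => T): T;
  removeValue(key: string): void;
}

// In-memory stand-in; a real one would talk to an external cache.
class InMemoryCacheService implements ICacheService {
  private readonly values = new Map<string, unknown>();

  getOrAdd<T>(key: string, factory: () => T): T {
    if (!this.values.has(key)) this.values.set(key, factory());
    return this.values.get(key) as T;
  }

  removeValue(key: string): void {
    this.values.delete(key);
  }
}

class TenantRepository implements ITenantRepository {
  reads = 0; // counts trips to the "primary storage", for demonstration
  private readonly rows: string[] = ["Contoso"];

  getTenants(): string[] {
    this.reads++;
    return [...this.rows];
  }

  addTenant(name: string): void {
    this.rows.push(name);
  }
}

// The decorator: same interface, wraps the real repository.
class TenantRepositoryCache implements ITenantRepository {
  private static readonly key = "Tenants";
  private readonly inner: ITenantRepository;
  private readonly cache: ICacheService;

  constructor(inner: ITenantRepository, cache: ICacheService) {
    this.inner = inner;
    this.cache = cache;
  }

  getTenants(): string[] {
    // Cache hit: return the stored list. Miss: load it, store it, return it.
    return this.cache.getOrAdd(TenantRepositoryCache.key, () => this.inner.getTenants());
  }

  addTenant(name: string): void {
    this.inner.addTenant(name);
    // Invalidate with the same key that is used for retrieval.
    this.cache.removeValue(TenantRepositoryCache.key);
  }
}
```

Calling `getTenants()` twice on the decorator reaches the wrapped repository only once; a call to `addTenant` removes the cached list, so the next read goes back to the repository.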

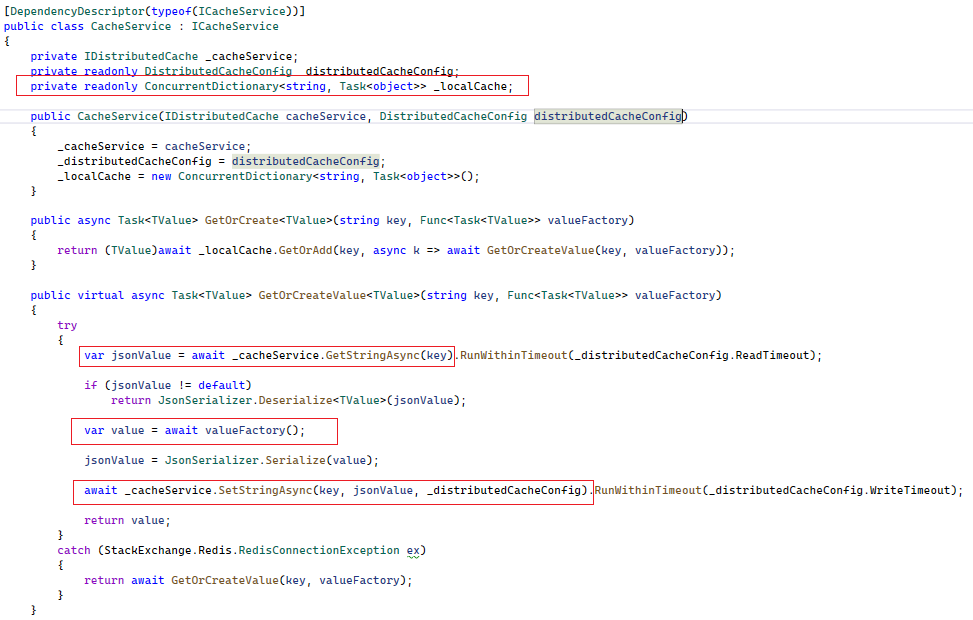

So basically the abstraction of the cache service exposes two methods: GetOrAdd and RemoveValue, and here is its implementation:

Because the purpose of the cache is to improve the system’s performance, not to replace its primary storage, the above implementation abandons the call after a couple of milliseconds (RunWithinTimeout) so that it does not introduce an unnecessary delay when the cache service is unavailable.
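The timeout idea can be sketched in TypeScript like this. The helper is named after the article’s RunWithinTimeout, but its signature, the 50 ms default and the `readFromCache` / `loadFromSource` parameters are assumptions; writing the loaded value back to the cache is left out to keep the sketch short.

```typescript
// Hypothetical helper: resolves with the operation's result, or with
// undefined if the operation fails or takes longer than `ms` milliseconds.
function runWithinTimeout<T>(operation: Promise<T>, ms: number): Promise<T | undefined> {
  return new Promise<T | undefined>(resolve => {
    const timer = setTimeout(() => resolve(undefined), ms);
    operation.then(
      value => {
        clearTimeout(timer);
        resolve(value);
      },
      () => {
        // A failing cache is treated like a cache miss.
        clearTimeout(timer);
        resolve(undefined);
      }
    );
  });
}

// Tries the cache first, but never waits longer than `timeoutMs` for it.
async function getOrAdd<T>(
  readFromCache: () => Promise<T | undefined>,
  loadFromSource: () => Promise<T>,
  timeoutMs = 50
): Promise<T> {
  const cached = await runWithinTimeout(readFromCache(), timeoutMs);
  return cached !== undefined ? cached : loadFromSource();
}
```

Note that the slow cache call is not cancelled in this sketch, only ignored: the caller stops waiting for it and falls back to the primary source.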

Another thing you may have noticed in the above code is a bit of fine tuning: cached values are kept in an in-memory collection to avoid calling the cache multiple times within the same process (e.g. an HTTP request). This can be very beneficial when complex logic is composed of multiple domain services that consume the same data. Just make sure that the lifetime of this in-memory collection is scoped to that process.

When deciding whether or not to cache a set of data, I usually consider the following:
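A minimal TypeScript sketch of this fine tuning, under stated assumptions: `ScopedCacheService` and `RemoteCacheStub` are hypothetical names, the remote cache is simulated with a `Map`, and "scoped" is modeled simply by creating one `ScopedCacheService` instance per request.

```typescript
interface ICacheService {
  getOrAdd<T>(key: string, factory: () => T): T;
}

// Stand-in for the remote cache; counts how often it is called.
class RemoteCacheStub implements ICacheService {
  calls = 0;
  private readonly values = new Map<string, unknown>();

  getOrAdd<T>(key: string, factory: () => T): T {
    this.calls++;
    if (!this.values.has(key)) this.values.set(key, factory());
    return this.values.get(key) as T;
  }
}

// One instance per request: remembers what this request already fetched,
// so repeated lookups never leave the process.
class ScopedCacheService implements ICacheService {
  private readonly local = new Map<string, unknown>();
  private readonly remote: ICacheService;

  constructor(remote: ICacheService) {
    this.remote = remote;
  }

  getOrAdd<T>(key: string, factory: () => T): T {
    if (this.local.has(key)) return this.local.get(key) as T;
    const value = this.remote.getOrAdd(key, factory);
    this.local.set(key, value);
    return value;
  }
}
```

Within one `ScopedCacheService` instance, any number of lookups for the same key cost a single remote call. A new instance for the next request starts with an empty local collection, so it asks the remote cache again and picks up any invalidation that happened in between.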

- Whether the data is used often and there is a high probability that multiple processes will request the same value within a short time.

- How often the data changes; read-only data and the results of idempotent calculations are typical candidates for caching.

- Whether the system can handle an eventually consistent data model until the cache expires; roles and permissions often fall into this category.

- How large the data is, because caching large collections reduces the probability of hits in the cache.

I hope that through the above implementation I managed to demonstrate how abstraction helps to reduce the complexity introduced by caching: generic components handle all the technical aspects, and all that is left to implement is a decorator that enriches the service whose performance needs to be improved. This solution is generally applicable and has no aspects specific to microservices.