This is Part II of a series presenting an implementation of the Command Pattern in web APIs that abstracts away the hosting platform. Extending the API with new functionality doesn't require any infrastructure code, and the same API runs on both Azure Functions and Minimal API. If you haven't done so yet, read Part I of this series first.

In the first part, we hosted an API that maps HTTP requests to command and query handlers, eliminating repetitive infrastructure code.

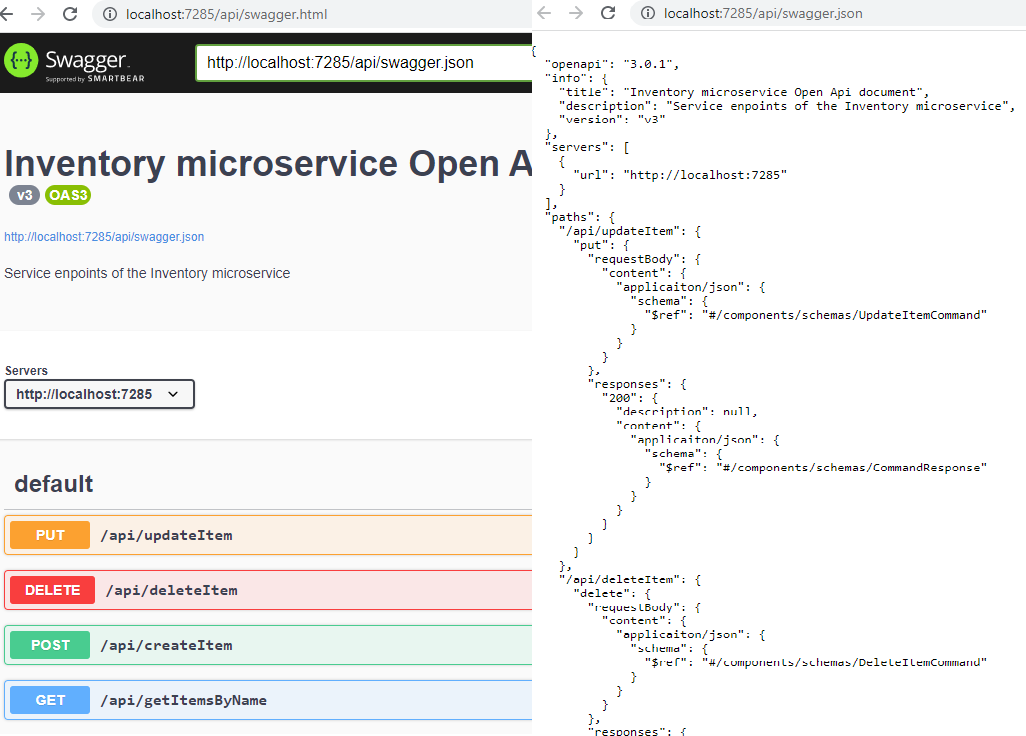

As a result of that implementation, if we have a query called GetItemsByName with a Name property and a query handler for it, our API automatically invokes the query handler in response to the URL “/api/getItemsByName?name=N1”. This already meets the first two of our objectives.
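The naming convention can be illustrated with a small sketch. It assumes, hypothetically, that the route is the request type name with a lower-cased first letter (which matches the GetItemsByName example) and that queries are served over GET while commands use POST. The IQuery/ICommand marker interfaces, the CreateItem command and the RouteConvention class are illustrative names, not the article's actual types.

```csharp
using System;

// Hypothetical marker interfaces standing in for the article's query/command abstractions
public interface IQuery { }
public interface ICommand { }

public record GetItemsByName(string Name) : IQuery;
public record CreateItem(string Name) : ICommand;

public static class RouteConvention
{
    // Assumed convention: "/api/" + type name with a lower-cased first letter
    public static string RouteFor(Type requestType) =>
        "/api/" + char.ToLowerInvariant(requestType.Name[0]) + requestType.Name[1..];

    // Assumed convention: queries are read-only and map to GET, everything else to POST
    public static string HttpMethodFor(Type requestType) =>
        typeof(IQuery).IsAssignableFrom(requestType) ? "GET" : "POST";
}
```

Here RouteConvention.RouteFor(typeof(GetItemsByName)) yields "/api/getItemsByName", and the name=N1 query string is what ends up bound to the Name property.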

Objectives

1. Be able to build extensible APIs without repetitive infrastructure components just by defining commands and command handlers.

2. The same solution should work in different hosting platforms without changing the business domain.

3. The API must expose an Open API definition (Swagger) for every endpoint in order to make the API discoverable.

4. Both Azure and local environments should use the same infrastructure implementations.

5. Cross-cutting concerns such as logging, exception handling and authorization should be implemented without mixing them with the business domain.

The next objective is to create an Open API JSON document that exposes the metadata of our API, making it discoverable for callers. Another practical advantage of having the Open API definition is that we can import it into Postman or API Management and make it available to code generator tools.

Creating the Open API Document

In the previous article we already saw the mapper between commands and requests; now we pick up the work from there and create a path for each of the map items. This can be done with a custom DocumentFilter that reads the request map, in my case the CommandToApiEndpointFilter:

```csharp
using System.Collections.Generic;
using Microsoft.OpenApi.Models;
using Swashbuckle.AspNetCore.SwaggerGen;

public void Apply(OpenApiDocument swaggerDoc, DocumentFilterContext context)
{
    // Replace any discovered paths with the ones derived from the command/query map
    swaggerDoc.Paths.Clear();

    var commandToApiMaps = _commandToApiMapper.GetRequestMap();

    foreach (var commandToApiMap in commandToApiMaps)
    {
        // Response: 200 with the JSON schema of the handler's result type
        var responseSchema = context.SchemaGenerator.GenerateSchema(commandToApiMap.ResponseType, context.SchemaRepository);
        var response = new OpenApiResponses { { "200", new OpenApiResponse { Content = new Dictionary<string, OpenApiMediaType> { { "application/json", new OpenApiMediaType { Schema = responseSchema } } } } } };
        var apiPath = new OpenApiPathItem { Operations = new Dictionary<OperationType, OpenApiOperation> { { commandToApiMap.OperationType, new OpenApiOperation { Responses = response } } } };

        // Request: the command/query object is bound from the JSON request body
        var requestSchema = context.SchemaGenerator.GenerateSchema(commandToApiMap.RequestType, context.SchemaRepository);
        apiPath.Operations[commandToApiMap.OperationType].RequestBody = new OpenApiRequestBody { Content = new Dictionary<string, OpenApiMediaType> { { "application/json", new OpenApiMediaType { Schema = requestSchema } } } };

        // One path per command/query, e.g. /api/getItemsByName
        swaggerDoc.Paths.Add($"/api/{commandToApiMap.RequestName}", apiPath);
    }
}
```

The above implementation only supports receiving data in the request body and binding it to a command object. However, some servers reject GET requests that carry a body, so to improve our REST compliance we should use query (or alternatively header) parameters instead. To support query parameters, the two lines that generate the request schema and the request body change this way:

```csharp
if (commandToApiMap.OperationType == OperationType.Get)
{
    // GET: describe the query object as query-string parameters.
    // A throwaway SchemaRepository keeps the query type out of the document's shared components.
    var schemaRepository = new SchemaRepository();
    var requestSchema = context.SchemaGenerator.GenerateSchema(commandToApiMap.RequestType, schemaRepository);

    // GetQueryParameters (not shown) turns the schema's properties into query parameters
    var parameters = GetQueryParameters(requestSchema, schemaRepository);
    apiPath.Operations[commandToApiMap.OperationType].Parameters = parameters;
}
else
{
    // Other verbs: keep binding the command from the JSON request body
    var requestSchema = context.SchemaGenerator.GenerateSchema(commandToApiMap.RequestType, context.SchemaRepository);
    apiPath.Operations[commandToApiMap.OperationType].RequestBody = new OpenApiRequestBody { Content = new Dictionary<string, OpenApiMediaType> { { "application/json", new OpenApiMediaType { Schema = requestSchema } } } };
}
```

Here is a fragment of the generated Open API document for the GetItemsByName query handler:

```json
"/api/getItemsByName": {
  "get": {
    "parameters": [
      {
        "name": "name",
        "in": "query",
        "schema": {
          "type": "string",
          "nullable": true
        }
      }
    ],
    "responses": {
      "200": {
        "description": null,
        "content": {
          "application/json": {
            "schema": {
              "$ref": "#/components/schemas/QueryResultOfListOfItem"
            }
          }
        }
      }
    }
  }
}
```

Bind query string to Query object

Now we have to make sure we can bind query strings to Query objects. There are a few NuGet packages available for this, but they lack support for complex object structures, arrays or parameter binding. My choice is to convert the query string to JSON so that the same type converters can be applied as for output serialization or command binding. This is essential if you need to support custom date formats or enum serialization applied at API level.

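```csharp
using System.Text.Json.Nodes;
using Microsoft.Extensions.Primitives;

// Extension methods must live in a static class (the class name is illustrative)
public static class QueryStringExtensions
{
    public static JsonObject QueryStringToJson(this IEnumerable<KeyValuePair<string, StringValues>> queryParameters)
    {
        var json = new JsonObject();

        // Group keys by the prefix before the first '.'; top-level keys fall into the "" group
        var queryParametersByKey = queryParameters.GroupBy(k => k.Key[0..Math.Max(k.Key.IndexOf('.'), 0)]);

        foreach (var parameterGroup in queryParametersByKey)
        {
            if (parameterGroup.Key != string.Empty)
            {
                // Strip "prefix." and recurse to build the nested object
                json.Add(parameterGroup.Key, QueryStringToJson(parameterGroup.Select(p => new KeyValuePair<string, StringValues>(p.Key[(parameterGroup.Key.Length + 1)..], p.Value))));
                continue;
            }

            // A single value becomes a JSON string...
            foreach (var single in parameterGroup.Where(p => p.Value.Count == 1))
                json.Add(single.Key, JsonValue.Create(single.Value[0]));

            // ...while a repeated key becomes a JSON array
            foreach (var multi in parameterGroup.Where(p => p.Value.Count > 1))
                json.Add(multi.Key, new JsonArray(multi.Value.Select(v => (JsonNode?)JsonValue.Create(v)).ToArray()));
        }

        return json;
    }
}
```

Above, query parameters are grouped by the prefix before their first dot (for example, the key filter.name becomes the name property of a nested filter object), so that they can be bound to a hierarchical object tree.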
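To see the grouping and the converter reuse end to end, here is a self-contained sketch. It follows the same idea as QueryStringToJson but uses string[] in place of StringValues so it runs without ASP.NET Core, and then deserializes the JSON with System.Text.Json's web defaults (case-insensitive property names, numbers readable from JSON strings) plus a JsonStringEnumConverter. The QueryBinder class and the SearchItems, ItemFilter and ItemStatus types are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;
using System.Text.Json.Nodes;
using System.Text.Json.Serialization;

// Hypothetical query types used only for this illustration
public enum ItemStatus { Active, Archived }

public class ItemFilter
{
    public string? Name { get; set; }
    public ItemStatus Status { get; set; }
}

public class SearchItems
{
    public ItemFilter? Filter { get; set; }
    public int Page { get; set; }
    public string[]? Tags { get; set; }
}

public static class QueryBinder
{
    // Web defaults: camelCase, case-insensitive names, numbers readable from JSON strings
    private static readonly JsonSerializerOptions Options =
        new(JsonSerializerDefaults.Web) { Converters = { new JsonStringEnumConverter() } };

    // Same grouping idea as QueryStringToJson, with string[] standing in for StringValues
    public static JsonObject ToJson(IEnumerable<KeyValuePair<string, string[]>> query)
    {
        var json = new JsonObject();
        foreach (var group in query.GroupBy(p => p.Key[0..Math.Max(p.Key.IndexOf('.'), 0)]))
        {
            if (group.Key != string.Empty)
            {
                // "filter.name" -> nested object "filter" with key "name"
                json.Add(group.Key, ToJson(group.Select(p =>
                    new KeyValuePair<string, string[]>(p.Key[(group.Key.Length + 1)..], p.Value))));
                continue;
            }
            foreach (var pair in group)
            {
                if (pair.Value.Length == 1)
                    json.Add(pair.Key, JsonValue.Create(pair.Value[0])); // single value -> JSON string
                else if (pair.Value.Length > 1)
                    json.Add(pair.Key, new JsonArray(pair.Value.Select(v => (JsonNode?)JsonValue.Create(v)).ToArray())); // repeated key -> array
            }
        }
        return json;
    }

    // Convert to JSON first, then let System.Text.Json apply its converters
    public static T? Bind<T>(IEnumerable<KeyValuePair<string, string[]>> query) =>
        JsonSerializer.Deserialize<T>(ToJson(query).ToJsonString(), Options);
}
```

A query string such as ?filter.name=N1&filter.status=Archived&tags=a&tags=b&page=2 therefore binds to a nested object, an enum, an array and an int through a single set of serializer options.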

Now that we have an API that is easy to extend with business functionality, it's time to check how easily it can be extended with application-level features such as cross-cutting concerns.

Authorizing Commands and Queries

Since every request made to our API goes through the CommandDispatcher, the best way to implement cross-cutting concerns is to extend its capabilities using the Decorator design pattern, as in the CommandDispatcherAuthorizer:

```csharp
[DecorateDependency(typeof(ICommandDispatcher), typeof(CommandDispatcher))]
public class CommandDispatcherAuthorizer : ICommandDispatcher
{
    private readonly ICommandDispatcher _inner;
    private readonly IIdentityService _identityService;

    public CommandDispatcherAuthorizer(ICommandDispatcher inner, IIdentityService identityService)
    {
        _inner = inner;
        _identityService = identityService;
    }

    public async Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand
    {
        // Reject the command before it reaches the wrapped dispatcher
        if (!await _identityService.HasPermission(command.Permission))
            return new CommandResponse { IsAuthorized = false };

        // Authorized: delegate to the decorated dispatcher
        return await _inner.DispatchCommand(command);
    }

    // ...
}
```

With the decorator pattern we can extend the existing command dispatcher in a way that allows enabling, disabling or replacing the authorization feature without altering the behavior of the command dispatcher itself.
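Because the authorizer depends only on the ICommandDispatcher abstraction, it can be exercised without any DI container or host. The sketch below wires it by hand instead of through [DecorateDependency]. The simplified interfaces, FixedIdentityService, CountingDispatcher, AuthorizingDispatcher and the DeleteItem command are hypothetical stand-ins for the article's types, and CommandResponse.IsAuthorized defaulting to true is an assumption.

```csharp
using System;
using System.Threading.Tasks;

// Simplified, hypothetical versions of the article's abstractions
public interface ICommand { string Permission { get; } }
public class CommandResponse { public bool IsAuthorized { get; set; } = true; }
public interface ICommandDispatcher
{
    Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand;
}
public interface IIdentityService { Task<bool> HasPermission(string permission); }

// Hypothetical identity service that grants a fixed set of permissions
public class FixedIdentityService : IIdentityService
{
    private readonly string[] _granted;
    public FixedIdentityService(params string[] granted) => _granted = granted;
    public Task<bool> HasPermission(string permission) =>
        Task.FromResult(Array.IndexOf(_granted, permission) >= 0);
}

// Innermost dispatcher: counts how many commands actually reached it
public class CountingDispatcher : ICommandDispatcher
{
    public int Handled { get; private set; }
    public Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand
    {
        Handled++;
        return Task.FromResult(new CommandResponse());
    }
}

// Same shape as CommandDispatcherAuthorizer, composed manually
public class AuthorizingDispatcher : ICommandDispatcher
{
    private readonly ICommandDispatcher _inner;
    private readonly IIdentityService _identityService;

    public AuthorizingDispatcher(ICommandDispatcher inner, IIdentityService identityService)
    {
        _inner = inner;
        _identityService = identityService;
    }

    public async Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand
    {
        // Short-circuit: the inner dispatcher never sees unauthorized commands
        if (!await _identityService.HasPermission(command.Permission))
            return new CommandResponse { IsAuthorized = false };
        return await _inner.DispatchCommand(command);
    }
}

public record DeleteItem(int Id) : ICommand
{
    public string Permission => "items.delete";
}
```

Wrapping the same inner dispatcher with an identity that lacks the permission returns IsAuthorized = false without the inner dispatcher ever running, so dropping the decorator registration removes the feature without touching the dispatcher.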

Logging and exception handling

In the same way, logging and exception handling can be added to our invocation pipeline with the CommandDispatcherLogger:

```csharp
using Microsoft.Extensions.Logging;

[DecorateDependency(typeof(ICommandDispatcher), typeof(CommandDispatcherAuthorizer))]
public class CommandDispatcherLogger : ICommandDispatcher
{
    private readonly ICommandDispatcher _inner;
    private readonly ILogger _logger;

    public CommandDispatcherLogger(ICommandDispatcher inner, ILogger<CommandDispatcher> logger)
    {
        _inner = inner;
        _logger = logger;
    }

    public async Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand
    {
        try
        {
            // Message templates keep the command name as a structured log property
            _logger.LogInformation("Handling {CommandName}", typeof(TCommand).Name);
            return await _inner.DispatchCommand(command);
        }
        catch (Exception ex)
        {
            _logger.LogError(ex, "An error occurred when handling {CommandName}", typeof(TCommand).Name);
            throw; // rethrow without resetting the stack trace
        }
    }

    // ...
}
```

Other cross-cutting concerns such as auditing, caching or localization can be added later using the same approach.
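Assuming the second DecorateDependency argument names the registration being wrapped, the two attributes compose the pipeline as logger → authorizer → dispatcher. The sketch below isolates the logging decorator's exception path. A List<string> stands in for ILogger, and the simplified interfaces, FailingDispatcher, LoggingDispatcher and ArchiveItem are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Simplified, hypothetical versions of the article's abstractions
public interface ICommand { }
public class CommandResponse { }
public interface ICommandDispatcher
{
    Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand;
}

public record ArchiveItem(int Id) : ICommand;

// Innermost dispatcher that always fails, to exercise the decorator's catch block
public class FailingDispatcher : ICommandDispatcher
{
    public Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand =>
        throw new InvalidOperationException("handler failed");
}

// Same shape as CommandDispatcherLogger; a List<string> stands in for ILogger<T>
public class LoggingDispatcher : ICommandDispatcher
{
    private readonly ICommandDispatcher _inner;
    private readonly List<string> _log;

    public LoggingDispatcher(ICommandDispatcher inner, List<string> log)
    {
        _inner = inner;
        _log = log;
    }

    public async Task<CommandResponse> DispatchCommand<TCommand>(TCommand command) where TCommand : ICommand
    {
        try
        {
            _log.Add($"Handling {typeof(TCommand).Name}");
            return await _inner.DispatchCommand(command);
        }
        catch (Exception ex)
        {
            _log.Add($"An error occurred when handling {typeof(TCommand).Name}: {ex.Message}");
            throw; // the caller still sees the original exception
        }
    }
}
```

With that assumed ordering, the logger sits outermost, so it also records commands that the authorizer later rejects; the handler's failure is logged once and still propagates to the host.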

Evaluating the remaining objectives

Our API exposes an Open API document, so from the caller’s perspective it’s discoverable and behaves like any traditional API regardless of our internal abstraction.

If you check the composition roots of the Minimal API and the Azure Function, you can see that only a one-time configuration is required to get this up and running. The only platform-specific code relates to the Swagger configuration for Azure Functions, which uses a different NuGet package than ASP.NET Core (Azure Functions running in an isolated worker process do not have this requirement).

Finally, cross-cutting concerns can be implemented decoupled from the application infrastructure (unlike ASP.NET Core middleware), which is a major improvement in portability and testability.

In this series we have seen the implementation details of building flexible APIs using the Command pattern and convention over configuration, an approach that fits well with modern domain-centric architecture patterns. It's also ideal for development teams who want to focus on extending the API by extending the business domain, without needing to understand the underlying infrastructure in detail. Because the infrastructure is decoupled from the domain and is host-agnostic, it can evolve with the latest trends without impacting the business domain; the lack of such separation is often the main reason applications become technologically obsolete.