In this article I’d like to discuss how accessing application-level configuration can be simplified by using a convention and an abstraction of the configuration model. This is part of a series of articles about how microservice architecture can be applied in a domain-centric way without constantly dealing with technical aspects.

If you haven’t already, I recommend checking out the other articles in this series.

First of all, we should clarify what counts as configuration, that is, which data should be defined as part of the application settings.

Configuration is a set of data associated with the application infrastructure or the business domain that is not changed by the application itself. It is stored in configuration files so that it can change without recompiling the solution. This is particularly handy when integrating with a CI/CD pipeline, where the deployment pipeline should be able to set the application configuration depending on the deployment target.

Configuration files are therefore very useful: they give the right amount of flexibility compared to hardcoded values, and because they are loaded once at application startup, there is no significant performance penalty when accessing those values whenever needed.

However, because they are basically key-value pairs in text format, retrieving them and materializing them into objects introduces some extra work and requires attention to make sure the keys are spelled correctly. Admittedly, this is not a big deal, but when you work with a strongly typed language and are used to being pampered by compile-time checks, string keys become an easy place for typos to slip in.

However, with a small effort and a convention, you can get rid of them and consume configuration like any other dependency injected into your services.

Here is my approach, which abstracts configuration management into an object-centric representation. Let’s jump directly to the desired state.

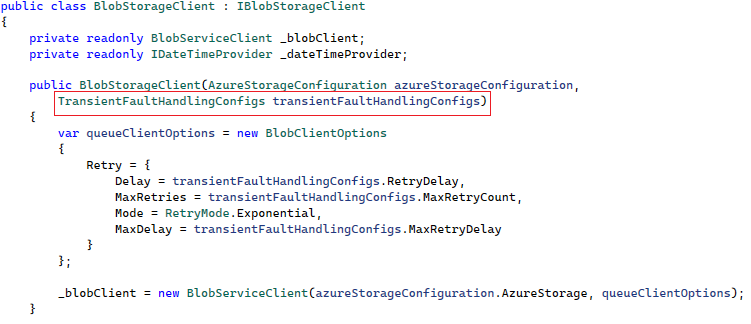

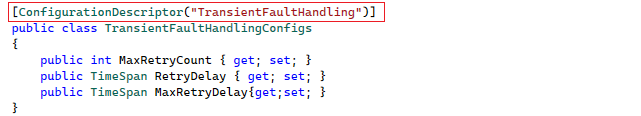

As you can see, the configuration values are injected through the constructor via the TransientFaultHandlingConfigs object, just like any other dependency; it’s strongly typed and easy to test.
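As a rough, framework-free illustration of what constructor injection buys here, the sketch below assumes a `TransientFaultHandlingConfigs` class with two hypothetical properties (`RetryCount`, `RetryDelayMilliseconds`) and a hypothetical `RetryPolicy` consumer. The real class in the screenshots may look different.

```csharp
using System;
using System.Threading;

namespace ConfigSketch.Injection
{
    // Hypothetical shape of the configuration object; the article does not list its properties.
    public sealed class TransientFaultHandlingConfigs
    {
        public int RetryCount { get; set; }
        public int RetryDelayMilliseconds { get; set; }
    }

    // Hypothetical consumer: it receives the configuration through its constructor,
    // exactly like any other dependency, and never reads configuration keys itself.
    public sealed class RetryPolicy
    {
        private readonly TransientFaultHandlingConfigs _configs;

        public RetryPolicy(TransientFaultHandlingConfigs configs)
        {
            _configs = configs ?? throw new ArgumentNullException(nameof(configs));
        }

        // Runs the operation; on an exception it waits and retries, up to RetryCount extra attempts.
        // Once the retries are exhausted, the last exception propagates to the caller.
        public T Execute<T>(Func<T> operation)
        {
            for (int attempt = 0; ; attempt++)
            {
                try
                {
                    return operation();
                }
                catch (Exception) when (attempt < _configs.RetryCount)
                {
                    Thread.Sleep(_configs.RetryDelayMilliseconds);
                }
            }
        }
    }
}
```

Because the consumer only depends on a plain object, a unit test can construct it with whatever values it needs, with no configuration files or container involved.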

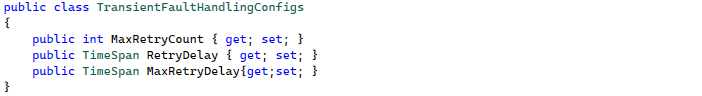

So far, there is nothing new on the table: Microsoft.Extensions.Configuration in .NET also provides methods that deserialize configuration values into a specific object, which is a good improvement over the old ConfigurationManager from .NET Framework. However, when you use it in its original form, you end up setting up configuration bindings as part of your IoC registration in every composition root.

This is where convention over configuration comes into play and helps get rid of the above code. In my case, the convention is that a configuration class must be decorated with an attribute called ConfigurationDescriptor, which receives the configuration key as a parameter, in order to be registered automatically in the container.

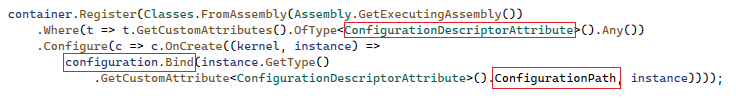
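A minimal sketch of such an attribute might look like the following. The `Key` property name, the section key `Common:TransientFaultHandling`, the helper `ConfigurationConvention` and the configuration properties are assumptions for illustration, not the definitions from the screenshot.

```csharp
using System;
using System.Reflection;

namespace ConfigSketch.Convention
{
    // Marker attribute carrying the configuration key (section path) the class is bound to.
    [AttributeUsage(AttributeTargets.Class, AllowMultiple = false, Inherited = false)]
    public sealed class ConfigurationDescriptorAttribute : Attribute
    {
        public ConfigurationDescriptorAttribute(string key)
        {
            if (string.IsNullOrWhiteSpace(key))
                throw new ArgumentException("A configuration key is required.", nameof(key));
            Key = key;
        }

        public string Key { get; }
    }

    // Hypothetical section key and properties, for illustration only.
    [ConfigurationDescriptor("Common:TransientFaultHandling")]
    public sealed class TransientFaultHandlingConfigs
    {
        public int RetryCount { get; set; }
        public int RetryDelayMilliseconds { get; set; }
    }

    // Hypothetical helper: this is the check a registration convention would perform on each type.
    public static class ConfigurationConvention
    {
        // Returns the declared configuration key, or null if the type does not follow the convention.
        public static string GetConfigurationKey(Type type) =>
            type.GetCustomAttribute<ConfigurationDescriptorAttribute>()?.Key;
    }
}
```

The key is written as a colon-delimited section path, which is how Microsoft.Extensions.Configuration addresses nested JSON sections.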

And here is the dependency registration that applies the convention, using the popular Castle Windsor IoC container.

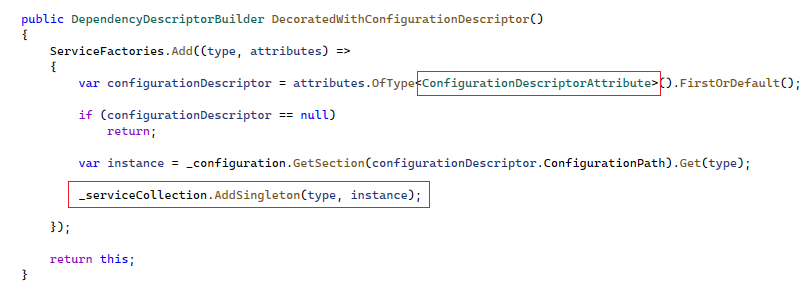

As an alternative to the above, I’m using a custom dependency registration tool built on top of Microsoft.Extensions.DependencyInjection.IServiceCollection, which is even simpler. It iterates through the types defined in the referenced assemblies (only those whose names start with the project prefix).

The custom DI tool I’ve built on top of IServiceCollection iterates once over the types defined in the application assemblies and calls all the registered service factories for each type. An example of a service factory for registering configuration objects is shown in the accompanying snippet. I found this approach very versatile, especially when custom dependency registration logic has to be applied, such as tenant-, scope- or API-version-specific dependencies.

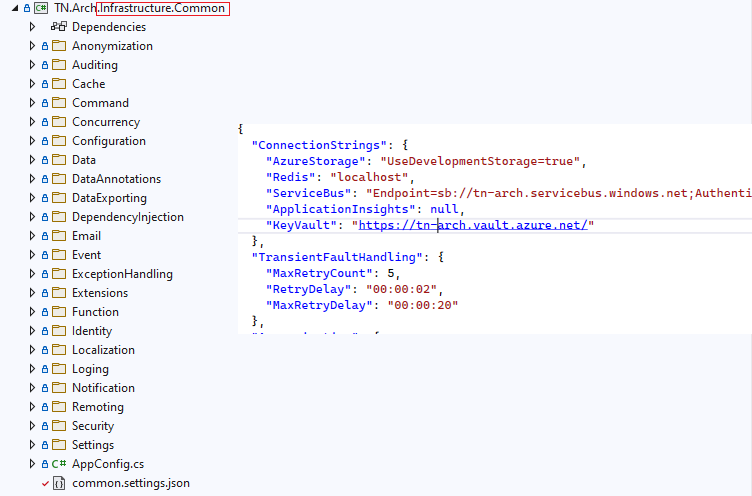
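To make the "iterate once, ask every factory" idea concrete without depending on a particular container, here is a sketch. `Registry`, `IServiceFactory`, `ConfigurationServiceFactory` and `Composition` are hypothetical names; a flat dictionary of colon-delimited keys stands in for `IConfiguration`, and the binder only handles simple types that `Convert.ChangeType` supports. In the real tool the registrations would go into `IServiceCollection` instead.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Reflection;

namespace ConfigSketch.Registration
{
    [AttributeUsage(AttributeTargets.Class)]
    public sealed class ConfigurationDescriptorAttribute : Attribute
    {
        public ConfigurationDescriptorAttribute(string key) => Key = key;
        public string Key { get; }
    }

    // Hypothetical stand-in for a DI container: maps a service type to a factory.
    public sealed class Registry
    {
        private readonly Dictionary<Type, Func<object>> _factories = new Dictionary<Type, Func<object>>();
        public void Add(Type serviceType, Func<object> factory) => _factories[serviceType] = factory;
        public T Resolve<T>() => (T)_factories[typeof(T)]();
    }

    // Hypothetical "service factory" contract: inspects one type and may register it.
    public interface IServiceFactory
    {
        void Register(Type type, Registry registry);
    }

    // Registers each class decorated with ConfigurationDescriptor as a singleton,
    // bound from "<Key>:<PropertyName>" entries of a flat settings dictionary.
    public sealed class ConfigurationServiceFactory : IServiceFactory
    {
        private readonly IReadOnlyDictionary<string, string> _settings;
        public ConfigurationServiceFactory(IReadOnlyDictionary<string, string> settings) => _settings = settings;

        public void Register(Type type, Registry registry)
        {
            var descriptor = type.GetCustomAttribute<ConfigurationDescriptorAttribute>();
            if (descriptor == null) return; // not a configuration class, leave it to other factories
            var instance = Bind(type, descriptor.Key); // bound once, the same instance is returned afterwards
            registry.Add(type, () => instance);
        }

        private object Bind(Type type, string sectionKey)
        {
            var instance = Activator.CreateInstance(type); // requires a public parameterless constructor
            foreach (var property in type.GetProperties(BindingFlags.Public | BindingFlags.Instance).Where(p => p.CanWrite))
            {
                if (_settings.TryGetValue($"{sectionKey}:{property.Name}", out var raw))
                    property.SetValue(instance, Convert.ChangeType(raw, property.PropertyType, CultureInfo.InvariantCulture));
            }
            return instance;
        }
    }

    public static class Composition
    {
        // Iterates once over the concrete classes and lets every factory inspect each type.
        public static Registry Build(IEnumerable<Assembly> assemblies, IEnumerable<IServiceFactory> factories)
        {
            var registry = new Registry();
            var factoryList = factories.ToList();
            foreach (var type in assemblies.SelectMany(a => a.GetTypes()).Where(t => t.IsClass && !t.IsAbstract))
                foreach (var factory in factoryList)
                    factory.Register(type, registry);
            return registry;
        }
    }

    // Hypothetical configuration class following the convention.
    [ConfigurationDescriptor("Common:TransientFaultHandling")]
    public sealed class TransientFaultHandlingConfigs
    {
        public int RetryCount { get; set; }
        public int RetryDelayMilliseconds { get; set; }
    }
}
```

With this shape, adding tenant-, scope- or API-version-specific logic means adding another `IServiceFactory` implementation, while the scanning loop stays untouched.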

As for storing configuration, I prefer JSON files added directly to the project where the configuration is actually used. System-level configuration that applies to multiple microservices is added as linked files, following the don’t-repeat-yourself (DRY) principle. However, depending on your host, some of these values will be overridden in the deployment pipeline, for example by environment variables set through Helm, or by Azure App Configuration.
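The override behaviour can be pictured as layered dictionaries where the last layer wins. The sketch below is a simplified model of that, not the framework's implementation: it borrows the convention of the .NET environment variables configuration provider, where a double underscore (`__`) in a variable name stands for the `:` section separator, so `Application__Timeout` overrides `Application:Timeout`. The `LayeredSettings` class and the key names are hypothetical, and the real provider handles more cases (such as variable prefixes).

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

namespace ConfigSketch.Overrides
{
    public static class LayeredSettings
    {
        // Merges layers in order; a key in a later layer overrides the same key in an earlier one.
        // Keys are compared case-insensitively, as configuration keys are in .NET.
        public static Dictionary<string, string> Merge(params IReadOnlyDictionary<string, string>[] layers)
        {
            var merged = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
            foreach (var layer in layers)
                foreach (var pair in layer)
                    merged[pair.Key] = pair.Value;
            return merged;
        }

        // Converts environment variables (e.g. Environment.GetEnvironmentVariables())
        // into configuration keys by replacing "__" with ":".
        public static Dictionary<string, string> FromEnvironment(IDictionary variables)
        {
            var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
            foreach (DictionaryEntry entry in variables)
                result[((string)entry.Key).Replace("__", ":")] = (string)entry.Value;
            return result;
        }
    }
}
```

In an application the last layer would come from `Environment.GetEnvironmentVariables()`, so whatever Helm sets on the container wins over the values in the JSON files.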

These configuration files should not contain any sensitive data, such as connection strings or credentials; such values are never tracked or committed to the remote code repository. For local environments, settings files are added with the suffix .Development.json and ignored by the repository (git in my case).

The sensitive and environment-specific values are substituted by a task defined in the CD pipeline, which takes them from its own variable definitions or from a key vault.
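Pipeline substitution tasks differ from one CI/CD system to another, so the following is only a sketch of the general idea. It assumes a hypothetical `#{Name}#` token syntax inside the JSON file and a dictionary standing in for the pipeline variables or key vault secrets; `TokenReplacer` is a hypothetical name.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

namespace ConfigSketch.Tokens
{
    public static class TokenReplacer
    {
        // Hypothetical token syntax: #{Name}#, where Name is letters, digits, '_', '.', ':' or '-'.
        private static readonly Regex Token = new Regex(@"#\{([A-Za-z0-9_.:-]+)\}#", RegexOptions.Compiled);

        // Replaces every token with its value. A missing value throws, so a half-configured
        // file never reaches a deployment. Values are inserted verbatim (no JSON escaping).
        public static string Replace(string template, IReadOnlyDictionary<string, string> values) =>
            Token.Replace(template, match =>
            {
                var name = match.Groups[1].Value;
                return values.TryGetValue(name, out var value)
                    ? value
                    : throw new KeyNotFoundException($"No value supplied for token '{name}'.");
            });
    }
}
```

Failing on a missing token is a design choice: it surfaces a forgotten pipeline variable at deployment time instead of at the first request.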

When using Kubernetes, only the values that change from deployment to deployment are part of a ConfigMap or Secret; app-specific values are kept in the JSON files together with the code relying on them, which makes it easy to run the microservices locally, without the Kubernetes ecosystem.

Here is an example of the microservice-specific configuration for the Application microservice:

In the above example, the configuration values are wrapped under the Application node, which is also the name of the microservice; this prevents multiple microservices from using the same key for different values.
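Microsoft.Extensions.Configuration flattens nested JSON into colon-delimited keys (for example `Application:PageSize`), which is why the top-level node matters. The sketch below imitates that flattening with System.Text.Json; `JsonFlattener` is a hypothetical helper, `Billing` a hypothetical second microservice and `PageSize` a hypothetical setting. It is not the framework's own parser (for instance, booleans keep their raw JSON text here).

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.Json;

namespace ConfigSketch.Flattening
{
    public static class JsonFlattener
    {
        // Flattens a JSON object into colon-delimited keys:
        // { "Application": { "PageSize": 20 } } becomes "Application:PageSize" = "20".
        // Expects a JSON object at the root.
        public static Dictionary<string, string> Flatten(string json)
        {
            var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
            using var document = JsonDocument.Parse(json);
            Visit(document.RootElement, null, result);
            return result;
        }

        private static void Visit(JsonElement element, string prefix, Dictionary<string, string> result)
        {
            switch (element.ValueKind)
            {
                case JsonValueKind.Object:
                    foreach (var property in element.EnumerateObject())
                        Visit(property.Value, Combine(prefix, property.Name), result);
                    break;
                case JsonValueKind.Array: // array items get their index as the key segment
                    var index = 0;
                    foreach (var item in element.EnumerateArray())
                        Visit(item, Combine(prefix, (index++).ToString(CultureInfo.InvariantCulture)), result);
                    break;
                case JsonValueKind.String:
                    result[prefix] = element.GetString();
                    break;
                case JsonValueKind.Null:
                    result[prefix] = null;
                    break;
                default: // numbers and booleans keep their raw JSON text
                    result[prefix] = element.GetRawText();
                    break;
            }
        }

        private static string Combine(string prefix, string key) => prefix == null ? key : prefix + ":" + key;
    }
}
```

Both files can define `PageSize`, but because each wraps its values under the microservice name, the flattened keys never collide once the files are loaded into the same configuration.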

The Infrastructure.Common project contains the application-level implementations, such as cross-cutting concerns, together with their configuration in common.settings.json, which contains the TransientFaultHandlingConfigs section from the initial example. The config files are copied to the build output directory, so any microservice referencing the Common library gets its config file as well.

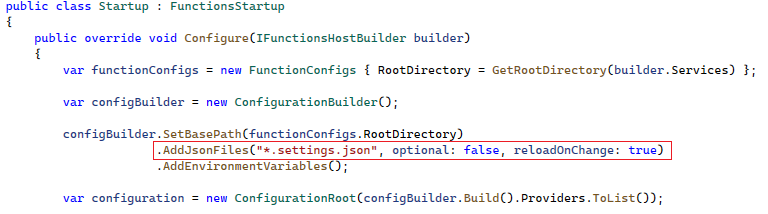
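Loading several JSON files where later ones override earlier ones is what `ConfigurationBuilder` does with successive `AddJsonFile(path, optional, reloadOnChange)` calls. As a framework-free sketch of that ordering, the hypothetical `SettingsLoader` below reads optional files from a directory in a fixed order; apart from `common.settings.json`, the file names used with it are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

namespace ConfigSketch.Loading
{
    public static class SettingsLoader
    {
        // Loads the given JSON files from the directory in order. Missing files are skipped
        // (like optional files), and keys from later files override earlier ones.
        public static Dictionary<string, string> Load(string directory, params string[] fileNames)
        {
            var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
            foreach (var fileName in fileNames)
            {
                var path = Path.Combine(directory, fileName);
                if (!File.Exists(path)) continue;
                using var document = JsonDocument.Parse(File.ReadAllText(path));
                Flatten(document.RootElement, null, settings);
            }
            return settings;
        }

        // Minimal flattening (expects an object at the root of each file): nested objects become
        // "Parent:Child" keys; strings are stored unquoted, other values as raw JSON text.
        private static void Flatten(JsonElement element, string prefix, Dictionary<string, string> settings)
        {
            if (element.ValueKind == JsonValueKind.Object)
            {
                foreach (var property in element.EnumerateObject())
                    Flatten(property.Value, prefix == null ? property.Name : prefix + ":" + property.Name, settings);
            }
            else
            {
                settings[prefix] = element.ValueKind == JsonValueKind.String ? element.GetString() : element.GetRawText();
            }
        }
    }
}
```

In an application the directory would typically be `AppContext.BaseDirectory`, where the copied config files end up, with the shared file loaded first and the ignored .Development.json file last.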

And here is the piece of code that loads these files into the IConfigurationRoot object at application startup:

I hope these ideas demonstrate how the technical aspects of configuration management can be implemented generically using an abstraction and a convention. The repetitive effort of adding new configuration values is now reduced to adding the value to a config file and defining a configuration object with the same structure, decorated with the ConfigurationDescriptor attribute.